When I started learning about AI and neural networks, I wanted to truly understand how they work — not just copy-paste code. This blog documents my learning journey through Artificial Neural Networks (ANN), Deep Neural Networks (DNN), CNNs, and beyond, with real questions I asked and answers that finally made things click.

🎯 What is an ANN?

An Artificial Neural Network (ANN) is inspired by how our brain works. At its core, it’s a mathematical function that learns patterns from data.

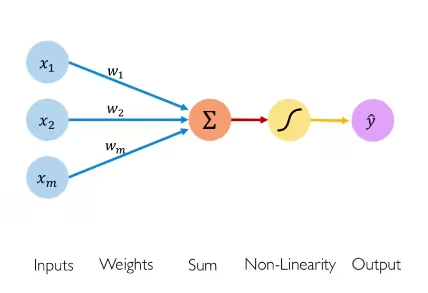

The simplest form? A single neuron that does this:

y = w × x + bWhere:

- x → input (e.g., hours studied)

- w → weight (importance of the input)

- b → bias (starting offset)

- y → predicted output (e.g., exam score)

The magic: The ANN’s job is to learn the best values for w and b automatically!

🔥 My First ANN Project: Predicting Exam Scores

I built my first neural network to predict exam scores based on study hours. Here’s the data:

| Hours Studied | Score |

|---|---|

| 1 | 50 |

| 2 | 55 |

| 3 | 60 |

| 4 | 65 |

| 5 | 70 |

| 6 | 75 |

The Code (Just 15 Lines!)

import tensorflow as tf

import numpy as np

# Input data

hours = np.array([1, 2, 3, 4, 5, 6], dtype=float)

scores = np.array([50, 55, 60, 65, 70, 75], dtype=float)

# Build ANN (1 neuron)

model = tf.keras.Sequential([

tf.keras.layers.Dense(1, input_shape=[1])

])

# Compile model

model.compile(optimizer='sgd', loss='mse')

# Train

model.fit(hours, scores, epochs=500, verbose=0)

# Predict

print("Predicted score for 8 hours:", model.predict([[8.0]]))Result: The model predicts ~80 for 8 hours of study! 🎉

🤔 Questions I Asked (And Finally Understood)

Q: What does Dense(1, input_shape=[1]) mean?

Answer: This creates ONE neuron that:

- Takes 1 input value

- Has 1 weight (

w) and 1 bias (b) — created automatically - Outputs:

output = w × input + b

Think of it as the simplest possible “brain cell” that can learn.

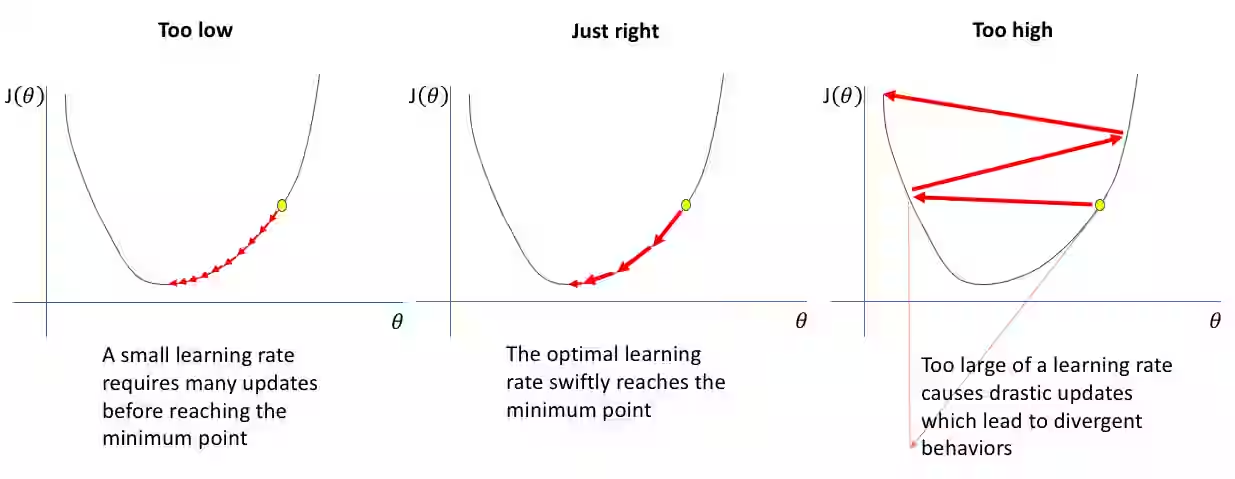

Q: What is an Optimizer? What does learning_rate=0.01 mean?

Answer: The optimizer decides how the model updates its weights to reduce error.

Think of walking down a foggy hill to find the lowest point:

- Position = current weight

- Height = loss (error)

- Step size = learning rate

optimizer = tf.keras.optimizers.SGD(learning_rate=0.01)This means: “Move 1% of the gradient distance at every update.”

| Learning Rate | Effect |

|---|---|

| Very small (0.0001) | Learns slowly, but stable 🐢 |

| Good (0.01) | Balanced, fast convergence ✅ |

| Too large (1.0) | Jumps over minimum, unstable ❌ |

Q: What does the model actually learn?

After training, I inspected the learned values:

w, b = model.layers[0].get_weights()

print("Weight:", w) # ≈ 5

print("Bias:", b) # ≈ 45So the ANN learned: score ≈ 5 × hours + 45

🔥 This is intelligence — nothing was hardcoded!

Q: Can I change the number of neurons and layers?

Yes! And this is how ANNs become more powerful:

# Single neuron (ANN)

Dense(1)

# Multiple neurons

Dense(4, activation='relu')

# Multiple layers (DNN)

model = tf.keras.Sequential([

tf.keras.layers.Dense(8, input_shape=[1], activation='relu'),

tf.keras.layers.Dense(4, activation='relu'),

tf.keras.layers.Dense(1)

])Important: More neurons ≠ automatically smarter. For simple linear problems, 1 neuron is perfect!

Q: What are “hierarchical features”?

Answer: Deep networks learn simple things first, then combine them into complex patterns across layers.

Example — How a network recognizes a face:

- Layer 1: Edges & lines

- Layer 2: Shapes (eyes, nose, mouth)

- Layer 3: Complete face

This is why deep learning works — it builds understanding layer by layer! 🧠

📊 ANN vs DNN — What’s the Difference?

| Feature | ANN | DNN |

|---|---|---|

| Layers | 0–1 hidden | 2+ hidden ✅ |

| Neurons | Few | Many ✅ |

| Pattern learning | Simple | Complex ✅ |

| Use case | Linear problems | Images, text, complex data |

Key insight: All DNNs are ANNs, but not all ANNs are deep!

🔄 How Learning Actually Happens

- Initialize random weights (

w) and bias (b) - Forward pass: Predict output using current weights

- Calculate loss: How wrong was the prediction?

- Backpropagation: Compute gradients (which direction to adjust)

- Update weights: Move opposite to error direction

- Repeat for many epochs

After 500 epochs → the model finds the best w and b!

🧪 Experiments That Built My Intuition

Try these yourself:

| Experiment | What Happens |

|---|---|

| Reduce epochs to 10 | Bad prediction (not enough learning) |

| Increase epochs to 1000 | Better fit, but watch for overfitting |

| Change learning rate to 0.0001 | Very slow learning |

| Change learning rate to 1.0 | Loss explodes! ❌ |

| Add noisy data | Model still learns the pattern |

🚀 What I Learned (Key Takeaways)

✅ Neurons are simple math: w × x + b

✅ Training = finding the best weights automatically

✅ Loss function measures how wrong predictions are

✅ Optimizer decides how to update weights

✅ Learning rate controls step size

✅ Depth (more layers) enables learning complex patterns

📁 Try It Yourself

I’ve open-sourced this project! Check out the full code:

🔗 GitHub: ANN Predict Exam Score

🎯 What’s Next on My AI Journey?

This was just the beginning! My learning roadmap:

- ✅ ANN — Predict exam scores (DONE!)

- 🔄 DNN — Classify handwritten digits (MNIST)

- 🔄 CNN — Image classification (Cats vs Dogs)

- 🔄 RNN/LSTM — Text and sequence prediction

- 🔄 Transfer Learning — Using pretrained models

Each step builds on the previous one. Stay tuned for more posts documenting my AI learning journey!

Have questions about neural networks? Feel free to reach out — I’m learning too, and explaining helps solidify understanding! 🧠✨