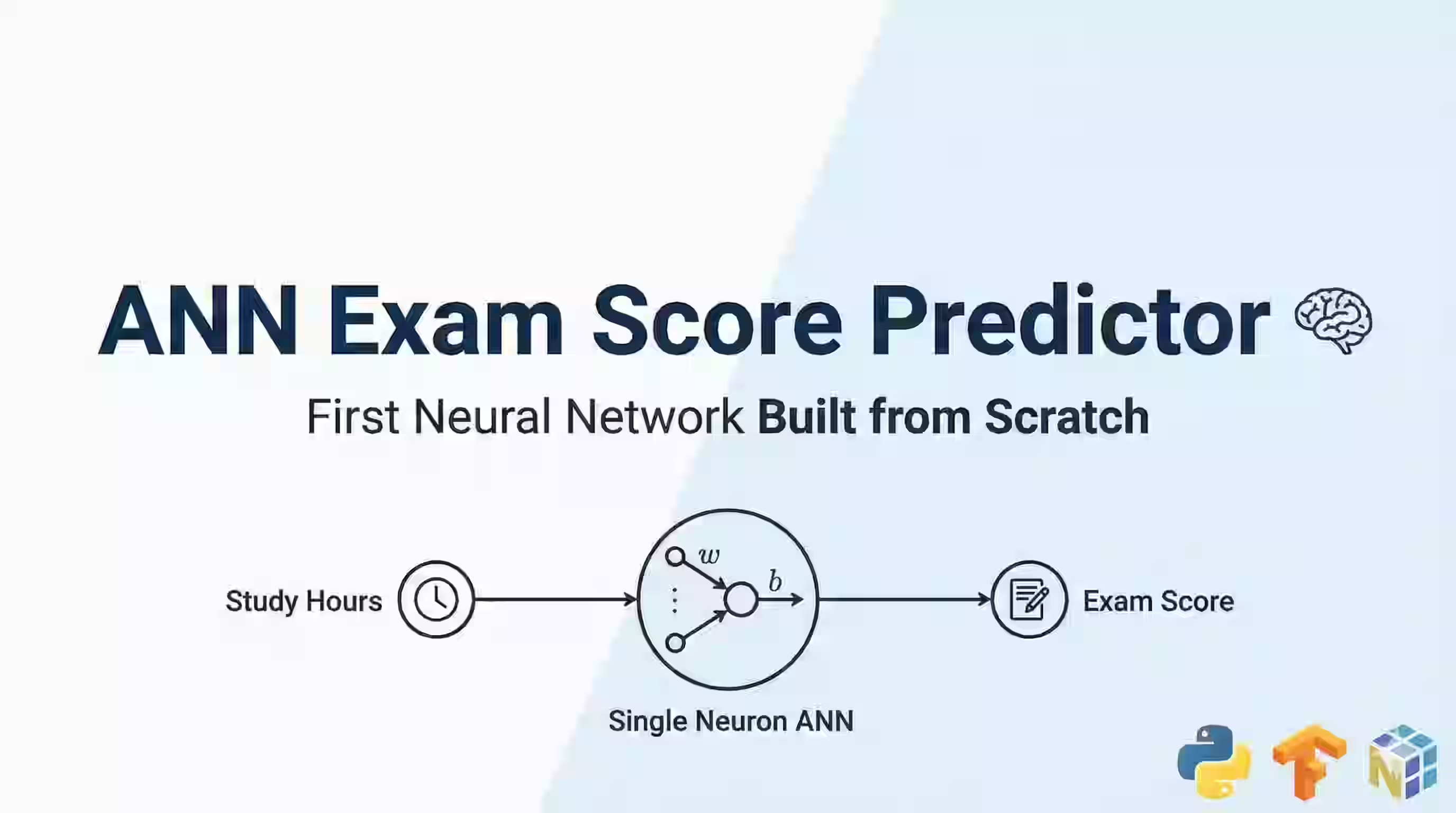

ANN Exam Score Predictor

My first neural network — a simple ANN that predicts exam scores based on study hours. Built from scratch to understand how neural networks actually learn.


Project Overview

This is my first neural network project — a simple Artificial Neural Network (ANN) that predicts exam scores based on the number of hours studied. Built from scratch using TensorFlow to truly understand how neural networks learn.

The goal wasn’t just to run code, but to understand:

- What a neuron is

- How weights and biases work

- What loss functions do

- How gradient descent optimizes the model
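All four ideas can be seen together in a few lines of NumPy. This is a minimal sketch, not the project's TensorFlow code: the helper names `neuron`, `mse`, and `gradient_step` are made up for illustration, and the zero starting point is an assumption (Keras starts from a random weight).

```python
import numpy as np

# Study hours and exam scores from the project dataset.
hours = np.array([1, 2, 3, 4, 5, 6], dtype=float)
scores = np.array([50, 55, 60, 65, 70, 75], dtype=float)

def neuron(x, w, b):
    """A single linear neuron: one weight, one bias, no activation."""
    return w * x + b

def mse(pred, target):
    """Mean squared error: the loss the neuron tries to minimize."""
    return np.mean((pred - target) ** 2)

def gradient_step(w, b, x, y, lr):
    """One gradient descent update of the weight and bias on the MSE loss."""
    error = neuron(x, w, b) - y
    grad_w = 2.0 * np.mean(error * x)  # derivative of the loss w.r.t. w
    grad_b = 2.0 * np.mean(error)      # derivative of the loss w.r.t. b
    return w - lr * grad_w, b - lr * grad_b

w, b = 0.0, 0.0  # assumed starting point for this sketch
loss_before = mse(neuron(hours, w, b), scores)
w, b = gradient_step(w, b, hours, scores, lr=0.01)
loss_after = mse(neuron(hours, w, b), scores)
print(f"loss before: {loss_before:.1f}, after one step: {loss_after:.1f}")
```

Repeating `gradient_step` many times is, in essence, what `model.fit` does with the `sgd` optimizer.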

🎯 The Problem

Given a simple dataset of study hours and corresponding exam scores, can we build a model that predicts the score for any number of study hours?

| Hours Studied | Exam Score |
|---|---|
| 1 | 50 |
| 2 | 55 |
| 3 | 60 |
| 4 | 65 |
| 5 | 70 |
| 6 | 75 |
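In code, the dataset is just two small arrays. The sketch below assumes NumPy arrays are used as model input (the variable names `X` and `points_per_hour` are illustrative) and checks that every extra hour adds the same number of points, which is why a straight line is enough.

```python
import numpy as np

hours = np.array([1, 2, 3, 4, 5, 6], dtype=np.float32)
scores = np.array([50, 55, 60, 65, 70, 75], dtype=np.float32)

# A Dense layer takes 2-D input shaped (num_samples, num_features),
# so the six hour values become a 6 x 1 column.
X = hours.reshape(-1, 1)
print(X.shape)  # (6, 1)

# Change in score per extra hour between neighbouring rows.
points_per_hour = np.diff(scores) / np.diff(hours)
print(points_per_hour)  # [5. 5. 5. 5. 5.]
```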

💡 The Solution

A single-neuron ANN that learns the relationship:

score = weight × hours + bias

The network discovers that weight ≈ 5 and bias ≈ 45, meaning:

score ≈ 5 × hours + 45

🔧 Technologies Used

- Python 3.8+ — Programming language

- TensorFlow/Keras — Neural network framework

- NumPy — Numerical computations

📊 Key Concepts Learned

1. Single Neuron Architecture

import tensorflow as tf

model = tf.keras.Sequential([
    tf.keras.layers.Dense(1, input_shape=[1])  # one unit, one input feature
])

2. Training with Gradient Descent

model.compile(optimizer='sgd', loss='mse')  # plain SGD, mean squared error loss
model.fit(hours, scores, epochs=500)

3. Inspecting Learned Parameters

w, b = model.layers[0].get_weights()  # weight ≈ 5, bias ≈ 45

🧪 Experiments Included

The project includes experiments to build intuition:

✅ Learning Rate Impact — See how different rates affect training

✅ Epoch Count — Understand when to stop training

✅ Weight Inspection — Verify what the model learned

✅ Prediction Testing — Test on unseen data
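The learning rate and epoch experiments can be approximated without TensorFlow. The following is a rough NumPy sketch under stated assumptions: full-batch gradient descent, a zero starting point, and a hypothetical `train` helper. Exact numbers from Keras will differ because its initial weight is random.

```python
import numpy as np

hours = np.array([1, 2, 3, 4, 5, 6], dtype=float)
scores = np.array([50, 55, 60, 65, 70, 75], dtype=float)

def train(lr, epochs, w=0.0, b=0.0):
    """Full-batch gradient descent on MSE; returns (w, b, final_loss)."""
    for _ in range(epochs):
        error = w * hours + b - scores
        w -= lr * 2.0 * np.mean(error * hours)
        b -= lr * 2.0 * np.mean(error)
    loss = np.mean((w * hours + b - scores) ** 2)
    return float(w), float(b), float(loss)

# Learning rate experiment: same epoch budget, different step sizes.
for lr in (0.001, 0.01, 0.05):
    w, b, loss = train(lr, epochs=500)
    print(f"lr={lr}: w={w:.2f}, b={b:.2f}, loss={loss:.3g}")

# A step size that is too large makes the loss grow instead of shrink.
# Only 20 epochs are run here so the numbers stay finite.
_, _, diverged_loss = train(0.1, epochs=20)
print(f"lr=0.1 after 20 epochs: loss={diverged_loss:.3g}")

# Epoch count experiment: same learning rate, longer training.
for epochs in (100, 500, 5000):
    w, b, loss = train(0.01, epochs=epochs)
    print(f"epochs={epochs}: w={w:.2f}, b={b:.2f}, loss={loss:.3g}")
```

Comparing the printed `w` and `b` against 5 and 45 shows how far each setting has converged. On raw, unscaled hours the bias tends to be the slower of the two to settle.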

📈 Results

- Training: 500 epochs with SGD optimizer

- Prediction for 8 hours: ~85 points ✅ (5 × 8 + 45 = 85)

- Model learned:

score = 5 × hours + 45
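One way to sanity-check the learned line is to compare it with the exact least-squares fit. The sketch below swaps in `np.polyfit` for the neural network, so it is a reference value rather than the project's training code. A trained network should land near these numbers once training has converged.

```python
import numpy as np

hours = np.array([1, 2, 3, 4, 5, 6], dtype=float)
scores = np.array([50, 55, 60, 65, 70, 75], dtype=float)

# Degree-1 least-squares fit; polyfit returns [slope, intercept].
weight, bias = np.polyfit(hours, scores, deg=1)

# Evaluate the fitted line at an unseen input of 8 study hours.
prediction_8h = weight * 8 + bias
print(f"weight={weight:.2f}, bias={bias:.2f}, 8 hours -> {prediction_8h:.1f}")
# weight=5.00, bias=45.00, 8 hours -> 85.0
```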

🚀 What This Project Taught Me

- Neural networks are not magic — they’re math + optimization

- A single neuron with a linear output can fit a linear relationship exactly, but it cannot capture curves

- The learning rate is crucial for stable training

- Loss functions guide the learning process

- Understanding the basics makes complex networks easier
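The point about linear problems can be checked numerically. In this sketch, `curved_scores` is hypothetical data invented purely for contrast, and `best_line_mse` is an illustrative helper that uses a least-squares fit to stand in for the best a single linear neuron could do.

```python
import numpy as np

hours = np.array([1, 2, 3, 4, 5, 6], dtype=float)
linear_scores = np.array([50, 55, 60, 65, 70, 75], dtype=float)  # project data
curved_scores = 45 + hours ** 2  # hypothetical, not from the project

def best_line_mse(x, y):
    """MSE of the best straight line w * x + b through the points."""
    w, b = np.polyfit(x, y, deg=1)
    return float(np.mean((w * x + b - y) ** 2))

linear_loss = best_line_mse(hours, linear_scores)
curved_loss = best_line_mse(hours, curved_scores)
print(f"linear data: {linear_loss:.3g}, curved data: {curved_loss:.3g}")
```

The loss on the linear data is zero up to rounding error, while the curved data leaves an error that no choice of weight and bias can remove.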

🔗 Links

- GitHub Repository — Full source code

- Blog Post: Understanding ANN — Detailed explanation of concepts

This project is part of my AI/ML learning journey. Next up: Deep Neural Networks for image classification!