DNN Handwritten Digit Classifier

A Deep Neural Network that recognizes handwritten digits (0-9) with 97%+ accuracy. Built using TensorFlow on the MNIST dataset to understand multi-layer networks and hierarchical feature learning.

Project Overview

This is my second neural network project — a Deep Neural Network (DNN) that recognizes handwritten digits from the famous MNIST dataset. Building on my ANN knowledge, this project explores what happens when we add depth to neural networks.

The goal was to understand:

- How multiple layers learn hierarchical features

- Why non-linearity (ReLU) is essential

- How softmax enables multi-class classification

- The difference between a shallow ANN and a DNN

🎯 The Problem

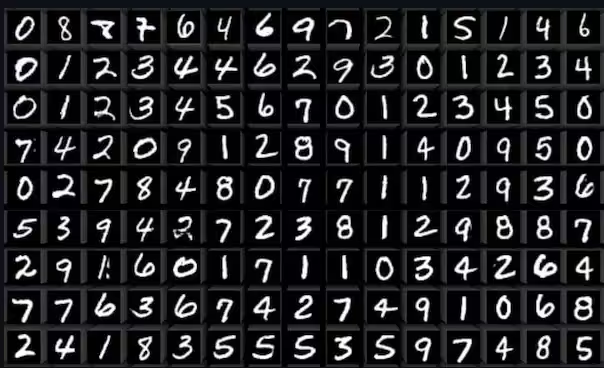

Given a 28×28 pixel grayscale image of a handwritten digit, classify it as one of 10 classes (0-9).

Dataset: MNIST

- 60,000 training images

- 10,000 test images

- 28×28 pixels, grayscale
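To make these shapes concrete, here is a minimal sketch that uses a small random NumPy array as a stand-in for the real images. The array contents, the batch size of 4, and the variable names are illustrative assumptions, not the actual dataset. It shows the 0-255 to 0-1 pixel scaling and what flattening does to a 28×28 image.

```python
import numpy as np

# Hypothetical stand-in for a batch of MNIST images: 4 grayscale images,
# 28x28 pixels, stored as unsigned 8-bit integers in the range 0-255.
rng = np.random.default_rng(seed=0)
fake_images = rng.integers(0, 256, size=(4, 28, 28), dtype=np.uint8)

# Scale pixel values from 0-255 to 0-1 (float division by 255).
scaled = fake_images / 255.0

# Flatten each 28x28 image into a 784-element vector, as a Flatten layer would.
flat = scaled.reshape(len(scaled), -1)

print(fake_images.shape)  # (4, 28, 28)
print(flat.shape)         # (4, 784)
```

The real dataset has the same per-image shape, only with 60,000 training and 10,000 test images instead of 4.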

💡 The Solution

A 4-layer DNN architecture:

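```python
import tensorflow as tf

model = tf.keras.Sequential([
    tf.keras.layers.Flatten(input_shape=(28, 28)),    # 28×28 image → 784-element vector
    tf.keras.layers.Dense(128, activation='relu'),    # first hidden layer
    tf.keras.layers.Dense(64, activation='relu'),     # second hidden layer
    tf.keras.layers.Dense(10, activation='softmax')   # one probability per digit
])
```

Layer-by-Layer Breakdown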

| Layer | Purpose | Output Shape |
|---|---|---|
| Flatten | Convert 2D image → 1D vector | 784 |
| Dense(128, relu) | Learn simple patterns (edges) | 128 |
| Dense(64, relu) | Learn complex patterns (shapes) | 64 |
| Dense(10, softmax) | Output probabilities for 0-9 | 10 |
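As a worked check on the shapes in this table, the number of trainable parameters in a Dense layer is inputs × units + units (weights plus one bias per unit). The sketch below only does that arithmetic; the helper name `dense_params` is made up for illustration.

```python
def dense_params(n_inputs: int, n_units: int) -> int:
    """Weights (n_inputs * n_units) plus one bias per unit."""
    return n_inputs * n_units + n_units

# Flatten output size, followed by the sizes of the three Dense layers
layer_sizes = [784, 128, 64, 10]

per_layer = [dense_params(a, b) for a, b in zip(layer_sizes, layer_sizes[1:])]
total = sum(per_layer)

print(per_layer)  # [100480, 8256, 650]
print(total)      # 109386
```

The Flatten layer only reshapes its input, so it contributes no parameters. Most of the weights sit in the first Dense layer.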

🔧 Technologies Used

- Python 3.8+ — Programming language

- TensorFlow/Keras — Deep learning framework

- NumPy — Numerical computations

- MNIST Dataset — Built-in TensorFlow dataset

📊 Key Concepts Demonstrated

1. Data Normalization

```python
x_train = x_train / 255.0  # Scale pixels to 0-1
```

2. ReLU Activation (Non-linearity)

```python
activation='relu'  # max(0, x)
```

3. Softmax for Multi-class Output

```python
Dense(10, activation='softmax')  # Probabilities sum to 1
```

4. Adam Optimizer

```python
optimizer='adam'  # Adaptive learning rate
```

5. Categorical Cross-Entropy Loss

```python
loss='sparse_categorical_crossentropy'  # For integer labels
```

📈 Results

| Metric | Value |
|---|---|
| Training Accuracy | ~98% |
| Validation Accuracy | ~97% |
| Test Accuracy | ~97-98% |
| Epochs | 5 |
| Training Time | ~30 seconds |

🧠 What Each Layer Learns

Flatten → Raw pixel values

Dense(128) → Edges, simple curves

Dense(64) → Digit parts (loops, lines)

Dense(10) → Final classification probabilities

This is hierarchical feature learning: building complex understanding from simple patterns!
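To see how data flows through this stack, the sketch below runs one forward pass in plain NumPy with random, untrained weights. It illustrates the layer arithmetic only and is not the Keras implementation. The function and variable names are assumptions, and because the weights are random, the output probabilities carry no meaning.

```python
import numpy as np

def relu(x):
    # ReLU: max(0, x), applied element-wise
    return np.maximum(0.0, x)

def softmax(z):
    # Subtract the row-wise max for numerical stability; each row sums to 1
    shifted = z - z.max(axis=-1, keepdims=True)
    exp = np.exp(shifted)
    return exp / exp.sum(axis=-1, keepdims=True)

rng = np.random.default_rng(seed=0)

# Random (untrained) weights and zero biases for 784 -> 128 -> 64 -> 10
sizes = [784, 128, 64, 10]
weights = [rng.normal(0.0, 0.05, size=(a, b)) for a, b in zip(sizes, sizes[1:])]
biases = [np.zeros(b) for b in sizes[1:]]

# One fake "image": 28x28 values in [0, 1), flattened to a 784-vector
x = rng.random((1, 28, 28)).reshape(1, -1)

h1 = relu(x @ weights[0] + biases[0])         # shape (1, 128)
h2 = relu(h1 @ weights[1] + biases[1])        # shape (1, 64)
probs = softmax(h2 @ weights[2] + biases[2])  # shape (1, 10)

print(h1.shape, h2.shape, probs.shape)
print(probs.sum())  # approximately 1.0
```

Training adjusts `weights` and `biases` so that the largest entry in `probs` lines up with the correct digit.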

🚀 What This Project Taught Me

- Depth enables complexity — more layers = more abstract features

- Non-linearity is essential — ReLU allows learning complex patterns

- Softmax outputs probabilities — perfect for classification

- Normalization matters — raw pixels hurt training stability

- Adam is a strong default optimizer: it often converges faster than plain SGD with less tuning

- Overfitting happens — regularization is important
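The point about non-linearity can be checked with a tiny hand-picked example. Without an activation, two stacked layers compute exactly what a single layer with the combined weight matrix would, so the extra depth adds nothing. The values below are hypothetical and biases are left out to keep the arithmetic easy to follow.

```python
import numpy as np

# Tiny hand-picked example (hypothetical values, biases omitted for brevity)
x = np.array([[1.0, -2.0]])            # one input with two features
W1 = np.array([[1.0, -1.0],
               [0.0,  1.0]])           # first layer weights (2 -> 2)
W2 = np.array([[1.0],
               [1.0]])                 # second layer weights (2 -> 1)

# Without an activation, two stacked layers equal one layer with weights W1 @ W2
stacked_linear = (x @ W1) @ W2
single_linear = x @ (W1 @ W2)

# With ReLU in between, negative hidden values are zeroed, so the result changes
stacked_relu = np.maximum(0.0, x @ W1) @ W2

print(stacked_linear)  # [[-2.]]
print(single_linear)   # [[-2.]]
print(stacked_relu)    # [[1.]]
```

Here the hidden values are `[1, -3]`. ReLU turns them into `[1, 0]`, which no single linear layer can reproduce for every input.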

🔗 Links

- GitHub Repository — Full source code

- Blog Post: From ANN to DNN — Detailed explanation

This project is part of my AI/ML learning journey. Next up: Convolutional Neural Networks for image classification!