RNN — Next Character Prediction

A simple RNN project that predicts the next character in a sequence. It learns character patterns from text using a SimpleRNN layer.


What I Did

Built an RNN that predicts the next character in a sequence.

Approach: use a SimpleRNN layer to process character sequences and learn their patterns.

Example:

- Input: “hell”

- Output: “o”

How It Works

Step 1: Tokenize characters

- Convert characters to integers

- Build vocabulary mapping
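Step 1 can be sketched in a few lines of plain Python. This is a minimal illustration, not the repository's code: the sample string and the names `char_to_idx` / `idx_to_char` are hypothetical.

```python
# Minimal sketch of Step 1: map each unique character to an integer index.
# The corpus and variable names are illustrative assumptions.
text = "hello world"

# Sorting the unique characters makes the mapping deterministic across runs.
vocab = sorted(set(text))
char_to_idx = {ch: i for i, ch in enumerate(vocab)}
idx_to_char = {i: ch for ch, i in char_to_idx.items()}

# Encode the whole text as a list of integers.
encoded = [char_to_idx[ch] for ch in text]

print(vocab)
print(encoded)
```

The reverse mapping `idx_to_char` is what turns the model's predicted indices back into readable text during generation.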

Step 2: Create sequences

- Slide window over text

- Each window is paired with the character that follows it, which becomes the target
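A sliding window like the one in Step 2 might look like this. `make_sequences` and `seq_length` are hypothetical names, and the window length used by the project isn't stated. The function works on any list, so it accepts raw characters or integer-encoded ones.

```python
def make_sequences(encoded, seq_length):
    """Slide a window of seq_length over the sequence.

    Each window becomes one input; the element right after it is the target.
    """
    inputs, targets = [], []
    for start in range(len(encoded) - seq_length):
        inputs.append(encoded[start:start + seq_length])
        targets.append(encoded[start + seq_length])
    return inputs, targets


# Characters are used directly here for readability; in training you would
# pass the integer-encoded text instead.
X, y = make_sequences(list("hello"), seq_length=4)
print(X, y)
```

With a window of 4, "hello" yields exactly one pair: "hell" as input and "o" as target, which matches the example at the top.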

Step 3: Train RNN

- SimpleRNN processes each character

- Hidden state carries memory

- Dense layer predicts next character
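The last part of Step 3 turns the Dense layer's raw scores into probabilities and scores them against the true next character. Here is a minimal sketch of that softmax plus cross-entropy computation. The vocabulary and logits are made-up values. In Keras, `sparse_categorical_crossentropy` is a common loss for integer targets, but the repository's exact loss setting is an assumption here.

```python
import math


def softmax(logits):
    """Turn raw Dense-layer scores into probabilities that sum to 1."""
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def cross_entropy(probs, target_idx):
    """Loss for one example: low when the true next char gets high probability."""
    return -math.log(probs[target_idx])


# Hypothetical values: a 4-character vocabulary and made-up Dense outputs
# for the input "hell". Training adjusts the weights to shrink this loss.
vocab = ["e", "h", "l", "o"]
logits = [0.1, -1.0, 0.5, 2.0]
probs = softmax(logits)

best = vocab[probs.index(max(probs))]
loss_o = cross_entropy(probs, vocab.index("o"))  # correct answer
loss_h = cross_entropy(probs, vocab.index("h"))  # wrong answer
print(best, round(loss_o, 3), round(loss_h, 3))
```

Training averages this loss over all (window, next character) pairs and updates the weights by backpropagating through the time steps.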

Step 4: Generate text

- Feed predictions back as input

- Generate character by character
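The feedback loop in Step 4 is independent of the model inside it. To keep this sketch runnable without a trained network, a hypothetical lookup table stands in for the RNN. It maps the last `order` characters to the character that most often follows them. Only `generate` shows the technique; swap `predict_next` for the real model's prediction.

```python
from collections import Counter, defaultdict


def build_lookup_model(text, order=3):
    # HYPOTHETICAL stand-in for the trained RNN: counts which character follows
    # each run of `order` characters. A real RNN conditions on its hidden state.
    table = defaultdict(Counter)
    for i in range(len(text) - order):
        table[text[i:i + order]][text[i + order]] += 1
    return table


def predict_next(model, context, order=3):
    counts = model.get(context[-order:])
    if not counts:
        return None  # unseen context: nothing to predict
    return counts.most_common(1)[0][0]  # greedy: pick the most likely character


def generate(model, seed, length, order=3):
    text = seed
    for _ in range(length):
        nxt = predict_next(model, text, order)
        if nxt is None:
            break
        text += nxt  # feed the prediction back in as the next input
    return text


model = build_lookup_model("hello world")
print(generate(model, "hel", 20))
```

Greedy selection is the simplest choice. With a real RNN it's also common to sample from the predicted probabilities so the generated text doesn't get stuck repeating itself.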

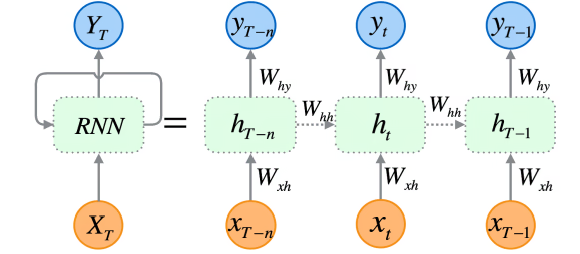

Key Concept: Hidden State (Memory)

An RNN’s power: the hidden state carries memory from previous steps.

Input: "h" → hidden state = [memory of "h"]

Input: "e" → hidden state = [memory of "he"]

Input: "l" → hidden state = [memory of "hel"]

Input: "l" → hidden state = [memory of "hell"]

Each step remembers the previous context.
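To see that memory in action, here is a tiny SimpleRNN-style cell run over "hell". The weights are hand-picked, hypothetical values. One-hot inputs replace the Embedding layer to keep it small. Notice that the two "l" steps receive the same input but end up in different hidden states, because the previous hidden state differs.

```python
import math


def rnn_step(x, h_prev, W_x, W_h, b):
    """One SimpleRNN step: h_t = tanh(W_x x_t + W_h h_{t-1} + b)."""
    return [
        math.tanh(
            sum(w * xi for w, xi in zip(W_x[j], x))
            + sum(w * hi for w, hi in zip(W_h[j], h_prev))
            + b[j]
        )
        for j in range(len(b))
    ]


def one_hot(ch, vocab):
    return [1.0 if c == ch else 0.0 for c in vocab]


# Hypothetical hand-picked weights: 3-character vocab, 2-unit hidden state.
vocab = ["e", "h", "l"]
W_x = [[0.5, -0.3, 0.8], [0.2, 0.7, -0.4]]  # hidden x input
W_h = [[0.6, -0.2], [0.3, 0.5]]             # hidden x hidden
b = [0.0, 0.0]

h = [0.0, 0.0]  # empty memory before the first character
states = []
for ch in "hell":
    h = rnn_step(one_hot(ch, vocab), h, W_x, W_h, b)
    states.append(h)
    print(ch, [round(v, 3) for v in h])
```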

Architecture

- Embedding: Convert character indices to vectors

- SimpleRNN: Process sequences with memory

- Dense: Predict next character probability
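A quick way to sanity-check this stack is to track tensor shapes and parameter counts through each layer. The sketch below follows the standard Keras layer definitions (biases enabled, SimpleRNN returning only its last hidden state). It assumes the project uses those defaults. The sizes in the example are hypothetical, not taken from the repository.

```python
def count_params(vocab_size, embed_dim, rnn_units):
    """Trainable parameters for Embedding -> SimpleRNN -> Dense."""
    embedding = vocab_size * embed_dim                    # one vector per character
    simple_rnn = rnn_units * (embed_dim + rnn_units + 1)  # input + recurrent weights + bias
    dense = rnn_units * vocab_size + vocab_size           # weights + bias per output character
    return {
        "embedding": embedding,
        "simple_rnn": simple_rnn,
        "dense": dense,
        "total": embedding + simple_rnn + dense,
    }


def output_shapes(batch, seq_length, vocab_size, embed_dim, rnn_units):
    """Tensor shape after each layer (SimpleRNN returns only its final hidden state)."""
    return [
        ("input", (batch, seq_length)),                 # integer character indices
        ("embedding", (batch, seq_length, embed_dim)),  # one vector per time step
        ("simple_rnn", (batch, rnn_units)),             # final hidden state
        ("dense", (batch, vocab_size)),                 # one probability per character
    ]


# Hypothetical sizes, chosen only for illustration.
print(count_params(vocab_size=40, embed_dim=16, rnn_units=64))
for name, shape in output_shapes(32, 10, 40, 16, 64):
    print(name, shape)
```

Most of the parameters sit in the recurrent weights, which grow with the square of the number of RNN units.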

Main Learning Points

✅ Sequential data has order

✅ Hidden state = neural memory

✅ Time steps = processing one element at a time

✅ Vanishing gradients are a real problem for SimpleRNN on long sequences

✅ LSTM/GRU are better for longer sequences

✅ Text generation = predict one character, feed back

What Happens Inside

Each time step:

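new_hidden = activation(input_weights × current_char + hidden_weights × previous_hidden + bias)

The same weights are reused at every time step, and each new hidden state depends on the previous one. This recurrence is why it’s called a Recurrent Neural Network.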
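The same update in standard notation, assuming SimpleRNN's default tanh activation and a Dense softmax output. Here $x_t$ is the embedding of the character at step $t$, $h_0 = 0$, and $T$ is the sequence length. $W_x$ and $W_h$ are the input and recurrent weight matrices (`kernel` and `recurrent_kernel` in Keras).

```latex
h_t = \tanh\left(W_x x_t + W_h h_{t-1} + b\right), \qquad t = 1, \dots, T

\hat{y} = \operatorname{softmax}\left(W_y h_T + b_y\right)
```

Only the final state $h_T$ feeds the Dense layer, so everything the model knows about "hell" has to be packed into that one vector.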

Limitations

- SimpleRNN struggles with long sequences (vanishing gradient)

- Can only see past, not future

- Slow to train compared to Transformers, because time steps are processed one after another instead of in parallel
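Why vanishing gradients hurt can be seen in a scalar toy RNN, $h_t = \tanh(w h_{t-1} + x_t)$. By the chain rule, the gradient of the last state with respect to the first is a product of one local factor $w(1 - h_t^2)$ per step. When those factors are below 1 in magnitude, the product shrinks geometrically with sequence length. The weight `w = 0.9` and the constant inputs are hypothetical.

```python
import math


def gradient_through_time(w, inputs, h0=0.0):
    """d h_T / d h_0 for a scalar RNN h_t = tanh(w * h_{t-1} + x_t).

    By the chain rule this is the product of w * (1 - h_t**2) over all steps.
    """
    h, grad = h0, 1.0
    for x in inputs:
        h = math.tanh(w * h + x)
        grad *= w * (1.0 - h * h)  # local derivative d h_t / d h_{t-1}
    return grad


w = 0.9
for T in (5, 20, 50):
    print(T, gradient_through_time(w, [0.5] * T))
```

After 50 steps the signal reaching the first character is vanishingly small, so the network barely learns from it. LSTM and GRU cells add gates that keep this signal alive over longer spans.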

GitHub

🔗 RNN-Project-Next-Character-Prediction

See the repository for full implementation.

RNNs have memory. That’s what makes them powerful for sequences. 🚀