Transfer Learning with CNN — Cats vs Dogs v2

Transfer learning with an EfficientNetB0 backbone on the Cats vs Dogs dataset, using a feature-extraction + fine-tuning approach. The trained model is deployed on Hugging Face.

Technologies

What I Did

Built a transfer learning model with an EfficientNetB0 backbone to classify images as cats or dogs.

Trained it using a two-phase approach:

- Feature Extraction Phase: Freeze the backbone and train only the classification head

- Fine-tuning Phase: Unfreeze backbone layers and retrain with a small learning rate

Results

| Phase | Accuracy |
|---|---|
| After Feature Extraction | ~50.98% |
| After Fine-tuning | ~70.52% |

Dataset

Dataset: Microsoft Cats vs Dogs (microsoft/cats_vs_dogs)

- ~23,000 training images

- ~5,800 validation images

- 2 classes: Cat, Dog
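A minimal sketch of loading and preparing the data, assuming the Hugging Face `datasets` library and TensorFlow. The column names `image` and `labels` (0 = cat, 1 = dog) are assumed from the dataset card and worth verifying; the 80/20 split, seed, 224-pixel input size and batch size are illustrative assumptions, not the project's actual settings. The helper names are hypothetical.

```python
# Sketch only: split ratio, seed, IMG_SIZE and batch size are assumptions.
import numpy as np
import tensorflow as tf

IMG_SIZE = 224  # assumed input resolution (EfficientNetB0's default)


def to_array(pil_image, size=IMG_SIZE):
    """Convert a PIL image to a float32 RGB array with pixels in [0, 255]."""
    img = pil_image.convert("RGB").resize((size, size))
    return np.asarray(img, dtype=np.float32)


def load_splits(test_size=0.2, seed=42):
    """Download microsoft/cats_vs_dogs and split it into train/validation."""
    from datasets import load_dataset  # requires `pip install datasets`

    ds = load_dataset("microsoft/cats_vs_dogs", split="train")
    splits = ds.train_test_split(test_size=test_size, seed=seed)
    return splits["train"], splits["test"]


def to_tf_dataset(hf_split, batch_size=32, shuffle=False):
    """Wrap an iterable of {"image", "labels"} records as a batched tf.data pipeline."""
    def gen():
        for ex in hf_split:
            # Assumed label mapping: 0 = cat, 1 = dog
            yield to_array(ex["image"]), ex["labels"]

    out = tf.data.Dataset.from_generator(
        gen,
        output_signature=(
            tf.TensorSpec(shape=(IMG_SIZE, IMG_SIZE, 3), dtype=tf.float32),
            tf.TensorSpec(shape=(), dtype=tf.int64),
        ),
    )
    if shuffle:
        out = out.shuffle(1000)
    return out.batch(batch_size).prefetch(tf.data.AUTOTUNE)
```

Pixels are deliberately left in the 0-255 range: Keras' EfficientNet models include their own rescaling and expect raw pixel values, so normalising to [0, 1] beforehand would feed the backbone inputs outside the range its ImageNet weights were trained on.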

Model Architecture

Base model: EfficientNetB0 (pre-trained on ImageNet)

Head: GlobalAveragePooling2D + Dropout + Dense layer
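The architecture above can be sketched in Keras roughly as follows. This is a minimal sketch, not the project's code: the 224-pixel input, the dropout rate of 0.2, and the single sigmoid unit in the Dense layer (binary output, assuming 1 = dog) are assumptions; a two-unit softmax head would work equally well. `build_model` is a hypothetical helper name.

```python
# Sketch only: input size, dropout rate and the 1-unit sigmoid head are assumptions.
import tensorflow as tf
from tensorflow import keras
from tensorflow.keras import layers


def build_model(img_size=224, dropout=0.2, weights="imagenet"):
    """EfficientNetB0 backbone + GlobalAveragePooling2D + Dropout + Dense head."""
    base = keras.applications.EfficientNetB0(
        include_top=False,   # drop the 1000-class ImageNet classifier
        weights=weights,     # "imagenet" loads the pre-trained weights
        input_shape=(img_size, img_size, 3),
    )
    base.trainable = False   # Phase 1: the backbone stays frozen

    inputs = keras.Input(shape=(img_size, img_size, 3))
    # Keras' EfficientNet rescales raw 0-255 pixels internally,
    # so no separate normalisation layer is added here.
    x = base(inputs, training=False)  # keep BatchNorm layers in inference mode
    x = layers.GlobalAveragePooling2D()(x)
    x = layers.Dropout(dropout)(x)
    outputs = layers.Dense(1, activation="sigmoid")(x)  # assumed: P(dog)
    return keras.Model(inputs, outputs), base
```

Returning `base` alongside the model makes it easy to flip the backbone's `trainable` flag later for the fine-tuning phase.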

Training Strategy:

- Phase 1: Freeze base, train head (feature extraction)

- Phase 2: Unfreeze and fine-tune with a very small learning rate
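The two-phase strategy can be sketched as below, assuming a Keras `model` with a binary (sigmoid) output whose backbone `base` was called with `training=False`. The learning rates (1e-3, then 1e-5), epoch counts, and unfreezing only the top 20 layers while keeping BatchNormalization layers frozen (as in the Keras EfficientNet fine-tuning example) are assumptions, not the project's recorded settings. The function names are hypothetical.

```python
# Sketch only: learning rates, epochs and n_layers are assumptions.
import tensorflow as tf
from tensorflow import keras
from tensorflow.keras import layers


def compile_for_phase(model, learning_rate):
    """(Re)compile; needed after changing any layer's `trainable` flag."""
    model.compile(
        optimizer=keras.optimizers.Adam(learning_rate=learning_rate),
        loss="binary_crossentropy",
        metrics=["accuracy"],
    )


def unfreeze_top_layers(base, n_layers=20):
    """Make the last `n_layers` of the backbone trainable, except BatchNorm."""
    base.trainable = True
    for layer in base.layers[:-n_layers]:
        layer.trainable = False
    for layer in base.layers[-n_layers:]:
        if isinstance(layer, layers.BatchNormalization):
            layer.trainable = False  # keep running statistics untouched


def train_two_phase(model, base, train_ds, val_ds,
                    head_epochs=5, fine_tune_epochs=5, n_layers=20):
    # Phase 1: feature extraction, frozen backbone, normal learning rate.
    base.trainable = False
    compile_for_phase(model, 1e-3)
    h1 = model.fit(train_ds, validation_data=val_ds, epochs=head_epochs)

    # Phase 2: fine-tuning, top layers unfrozen, ~100x smaller learning rate
    # so the pre-trained weights are only nudged, not overwritten.
    unfreeze_top_layers(base, n_layers)
    compile_for_phase(model, 1e-5)
    h2 = model.fit(train_ds, validation_data=val_ds,
                   epochs=head_epochs + fine_tune_epochs,
                   initial_epoch=head_epochs)
    return h1, h2
```

Recompiling between phases matters: in Keras, changes to `trainable` only take effect for training after `compile()` is called again.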

Key Learning: What is Transfer Learning?

Transfer learning means reusing the weights of a model already trained on a large dataset (here ImageNet, with over a million labelled images) instead of training from scratch.

Why it works:

- The pre-trained backbone has already learned general visual features such as edges, textures, and shapes

- We reuse that knowledge for our specific task (cats vs dogs)

- It is much faster and needs less data than training from scratch
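One way to see "reusing that knowledge" concretely: a frozen backbone can act purely as a feature extractor, turning each image into a fixed-length vector that a small classifier then separates. The sketch below assumes Keras; with `pooling="avg"`, EfficientNetB0 outputs a 1280-dimensional vector per image. `make_feature_extractor` and `extract_features` are hypothetical helper names.

```python
# Sketch only: helper names and default image size are assumptions.
import numpy as np
from tensorflow import keras


def make_feature_extractor(weights="imagenet", img_size=224):
    """Frozen EfficientNetB0 mapping an image to a 1280-d feature vector."""
    extractor = keras.applications.EfficientNetB0(
        include_top=False,
        weights=weights,
        input_shape=(img_size, img_size, 3),
        pooling="avg",  # global average pooling built into the backbone
    )
    extractor.trainable = False  # reuse the learned features as-is
    return extractor


def extract_features(extractor, images):
    """images: float array of shape (N, H, W, 3) with pixels in [0, 255]."""
    return extractor.predict(images, verbose=0)
```

Training only a Dense layer on top of these vectors is essentially what the feature-extraction phase does; fine-tuning then lets the backbone adapt those features to cats and dogs.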

Two phases:

- Freeze base + train head: reaches ~50% accuracy quickly

- Fine-tune base + head: improves to ~70% accuracy

Links

🔗 GitHub: Tranfer-Lrearning-with-CNN

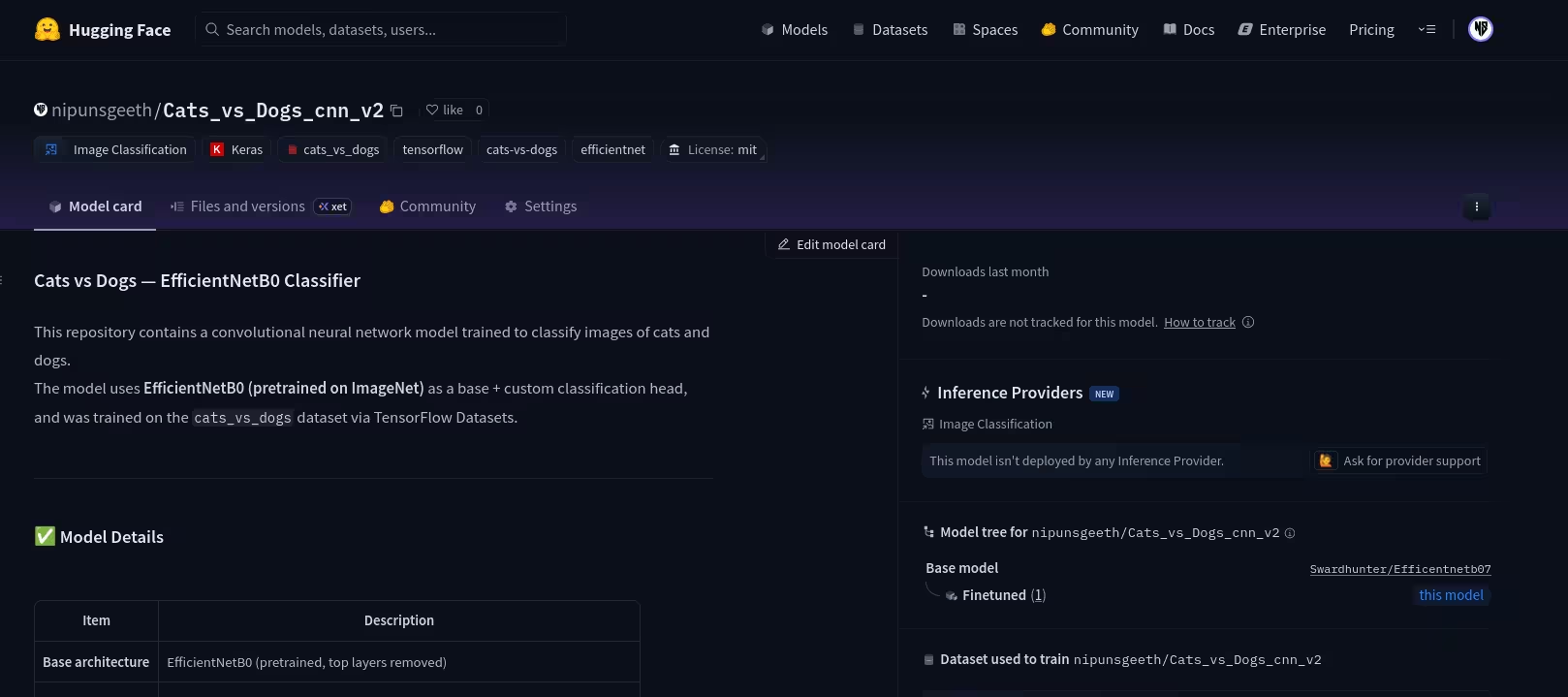

🔗 Model on Hugging Face: nipunsgeeth/Cats_vs_Dogs_cnn_v2

Transfer learning is a practical way to do deep learning: reuse pre-trained knowledge instead of starting from scratch. 🚀